Ah yes, the scanner software company Thorn that’s trying to lobby chat control into existence in the EU. Sorry, but I don’t trust them or their surveys.

That said, spreading deepfakes of others without their consent is obviously wrong.

#nobridge

Never mind, it’s been abandoned by the company that contributed the most to it.

https://lwn.net/Articles/882460/

I haven’t tried it myself, but there is LibreOffice Online:

https://www.libreoffice.org/download/libreoffice-online/

https://hub.docker.com/r/libreoffice/online/

Their site works fine without allowing JavaScript, and that way it turns into quite a simple thing too!

Does that mean that you consider the temporary loss of her voice the same harm as if she had lost access permanently?

Do keep in mind I do not believe the banning to be ok either - but I’d rather have a company where the human factor sometimes fails that can properly undo their mistake and apologize than something like Meta where you cannot even get in touch with a human if something gets flagged.

The extreme of a company that never makes a mistake would of course be the best, but that’s never going to happen.

I hope the self-hosted solution that @singletona@lemmy.world mentioned becomes reality, both for people like Joyce and because it would be a step towards self-hosted voice assistants for those of us who refuse to use cloud-based ones.

When I first asked Sophia Noel, a company representative, about the incident, she directed me to the company’s prohibited use policy.

There are rules against threatening child safety, engaging in illegal behavior, providing medical advice, impersonating others, interfering with elections, and more.

But there’s nothing specifically about inappropriate language. I asked Noel about this, and she said that Joyce’s remark was most likely interpreted as a threat.

[…]

Joyce doesn’t hold a grudge—and her experience is far from universal.

Jules uses the same technology, but he hasn’t received any warnings about his language—even though a comedy routine he performs using his voice clone contains plenty of curse words, says his wife, Maria.

He opened a recent set by yelling “Fuck you guys!” at the audience—his way of ensuring they don’t give him any pity laughs, he joked.

That comedy set is even promoted on the ElevenLabs website. Blank says language like that used by Joyce is no longer restricted.

“There is no specific swear ban that I know of,” says Noel.

That’s just as well.

And then she got an apology and got her account reinstated by ElevenLabs.

SnappyMail seems to be a fork of Rainloop, and both Rainloop and SnappyMail appear to allow multiple providers - https://snappymail.eu/

Cypht seems to be a similar solution where you selfhost a webserver that acts as a web client to external email providers - https://www.cypht.org/documentation/

I find nothing about push notifications for either of those solutions though, and I’m not sure about how much the webclients cache.

That they do, but your contacts don’t have to get it anymore.

A self-hosted Matrix stack built from source, with Matrix clients also built from source and an e2ee implementation that you yourself have the competence to verify, would be the only secure communication I know of if you don’t want to trust a third party.

Yeah, the glaring problem of having to share your phone number is gone too:

https://support.signal.org/hc/en-us/articles/6712070553754-Phone-Number-Privacy-and-Usernames

OpenAI does not make hardware.

Yeah, I didn’t mean to imply that either. I meant to write OneAPI. :D

It’s just that I’m afraid Nvidia will reach the same point as Raspberry Pis, where even if there’s better hardware out there, people still buy Raspberry Pis because of the available software and hardware accessories. That in turn means new software and hardware gets aimed at Raspberry Pis because of the larger market share. And then it loops.

Now if someone gets a CUDA competitor going that runs equally well on Nvidia, AMD and Intel GPUs and becomes efficient and fast enough to break that kind of self-reinforcing loop, then I don’t care if it’s AMD’s ROCm or Intel’s OneAPI. I just hope it happens before it’s too late.

That does sound difficult to navigate.

With OneAPI being backed by so many big names, do you think they will be able to upset CUDA in the future, or has Nvidia just become too entrenched?

Would a B580 24GB and B770 32GB be able to change that last sentence regarding GPU hardware worth buying?

I don’t have any personal experience with self-hosted LLMs, but I thought that ipex-llm was supposed to be a backend for llama.cpp?

https://yuwentestdocs.readthedocs.io/en/latest/doc/LLM/Quickstart/llama_cpp_quickstart.html

Do you have time to elaborate on your experience?

I see your point, they seem to be investing in each and every area related to AI at the moment.

Personally I hope we get a third player in the dGPU segment in the form of Intel ARC and that they successfully break the Nvidia CUDA hegemony with their OneAPI:

https://uxlfoundation.org/

https://oneapi-spec.uxlfoundation.org/specifications/oneapi/latest/introduction

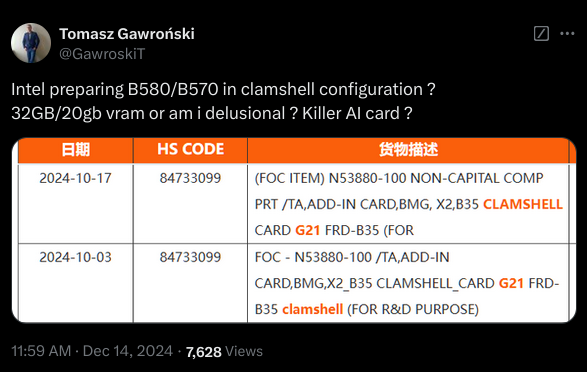

All GDDR6 modules, be they from Samsung, Micron, or SK Hynix, have a data bus that’s 32 bits wide. However, the bus can be used in a 16-bit mode—the entire contents of the RAM are still accessible, just with less peak bandwidth for data transfers. Since the memory controllers in the Arc B580 are 32 bits wide, two GDDR6 modules can be wired to each controller, aka clamshell mode.

With six controllers in total, Intel’s largest Battlemage GPU (to date, at least) has an aggregated memory bus of 192 bits and normally comes with 12 GB of GDDR6. Wired in clamshell mode, the total VRAM now becomes 24 GB.

We may never see a 24 GB Arc B580 in the wild, as Intel may just keep them for AI/data centre partners like HP and Lenovo, but you never know.
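The arithmetic in the quoted passage can be checked with a rough sketch like the one below. The controller count, controller width and 12 GB total come from the quote; the 2 GB per module is derived from those numbers (12 GB over six modules) rather than stated, and the function name is just for illustration.

```python
# Figures from the quoted passage; per-module capacity is derived, not stated.
CONTROLLERS = 6
CONTROLLER_WIDTH_BITS = 32
NORMAL_VRAM_GB = 12


def memory_config(modules_per_controller: int) -> dict:
    """Return bus width and VRAM for a given number of modules per controller."""
    # Normal layout: one module per controller, so 12 GB / 6 = 2 GB per module.
    module_capacity_gb = NORMAL_VRAM_GB // CONTROLLERS
    # Modules sharing a controller split its 32-bit interface equally.
    bits_per_module = CONTROLLER_WIDTH_BITS // modules_per_controller
    return {
        "bits_per_module": bits_per_module,
        "bus_width_bits": CONTROLLERS * CONTROLLER_WIDTH_BITS,
        "vram_gb": CONTROLLERS * modules_per_controller * module_capacity_gb,
    }


print(memory_config(1))  # normal layout
print(memory_config(2))  # clamshell layout
```

The aggregate bus stays at 192 bits in both layouts; only the capacity doubles, since each module in clamshell mode runs in 16-bit mode.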

Well, it would be a cool card if it’s actually released. Could also be a way for Intel to “break into the GPU segment” combined with their AI tools:

They’re starting to release tools to use Intel ARC for AI tasks, such as AI Playground and IPEX LLM:

https://game.intel.com/us/stories/introducing-ai-playground/

https://www.intel.com/content/www/us/en/products/docs/discrete-gpus/arc/software/ai-playground.html

https://game.intel.com/us/stories/wield-the-power-of-llms-on-intel-arc-gpus/

https://github.com/intel-analytics/ipex-llm

I would register my own domain, rent a small VPS, and run a Debian 12 server with bind9 for DNS + dyndns.

If you don’t want to put the whole domain on your own name servers, you can always delegate a subdomain to the Debian 12 server and keep your main domain on your domain registrar’s name servers.

edit:

If your registrar is supported the ddns-updater sounds a lot easier.
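For the bind9 route, the dyndns part usually comes down to feeding a few commands to `nsupdate`. Below is a minimal sketch that only builds that command text; the server, zone and host names are made up for illustration, and it assumes the zone on the bind9 server has already been set up to accept dynamic updates signed with a TSIG key.

```python
import ipaddress


def build_nsupdate_script(server: str, zone: str, hostname: str,
                          ip: str, ttl: int = 300) -> str:
    """Build nsupdate input that replaces the address record for hostname."""
    # Pick A for IPv4 and AAAA for IPv6; raises ValueError on a bad address.
    address = ipaddress.ip_address(ip)
    record_type = "A" if address.version == 4 else "AAAA"
    lines = [
        f"server {server}",
        f"zone {zone}",
        f"update delete {hostname} {record_type}",
        f"update add {hostname} {ttl} {record_type} {address}",
        "send",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical names; replace with your own delegated subdomain.
print(build_nsupdate_script("ns1.example.com", "dyn.example.com",
                            "home.dyn.example.com", "203.0.113.7"))
```

The output would then be piped into something like `nsupdate -k <keyfile>` from a cron job or a hook that runs when the WAN address changes.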

Hairpin NAT/NAT reflection can make visiting the WAN IP from the LAN a different experience than doing it from somewhere else. Or what is your what?

First off, check that it is also true when using a device outside the LAN. Easiest would be to check with your phone with wifi off. You probably won’t get to the login.

If you do then it’s time to check firewall settings.
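If you have a machine outside the LAN to test from (a VPS, for example), a small script can do the same check as the phone. This is only a sketch with a made-up function name, and a successful TCP connect just tells you something is listening on that port, not that it is the login page.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Example, run from outside the LAN against your WAN IP (placeholder address):
# print(port_is_open("203.0.113.7", 443))
```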

A DIY solution like your home server is great. I’m just averse to recommending it to someone who needs to ask such an open-ended question here. A premade NAS is a lot more plug n play.

Personally I went with an ITX build where I run everything in a Debian KVM/qemu host, including my fedora workstation as a vm with vfio passthrough of a usb controller and the dgpu. It was a lot of fun setting it up, but nothing I’d recommend for someone needing advice for their first homelab.

I agree with your assessment of old servers, way too power hungry for what you get.

A simple way to ensure your selfhosting is easy to manage is to get a NAS for storage and then other device(s) for compute. For your current plans I think you’d get far with a Synology DS224+ (or DS423+ if you want more disk slots).

Then when the NAS is no longer enough, you can add an extra device for compute (a mini PC or whatever you want) and let that device use the NAS as storage.

Oh and budget to buy at least one large USB Drive to use as a backup, even if your NAS runs a redundant RAID.

I also tend to fall back to Clonezilla. I don’t feel that the Rescuezilla GUI adds much.

Regarding compatibility, both the latest Rescuezilla (since September 2024) and Clonezilla (since July 2024) use partclone 0.3.32, so they should once again be compatible.

https://github.com/rescuezilla/rescuezilla/releases

The very same.

They’re lobbying the EU for backdoors in e2ee so they can sell their tech stack to scan all our private communication. https://www.thorn.org/solutions/for-platforms/